57% Cost Cut: Model Routing for Multi-Agent Systems

One line of YAML. That was it: `model: sonnet`. This single line in an agent's frontmatter reduced our per-run costs for one of the most-used agents in our

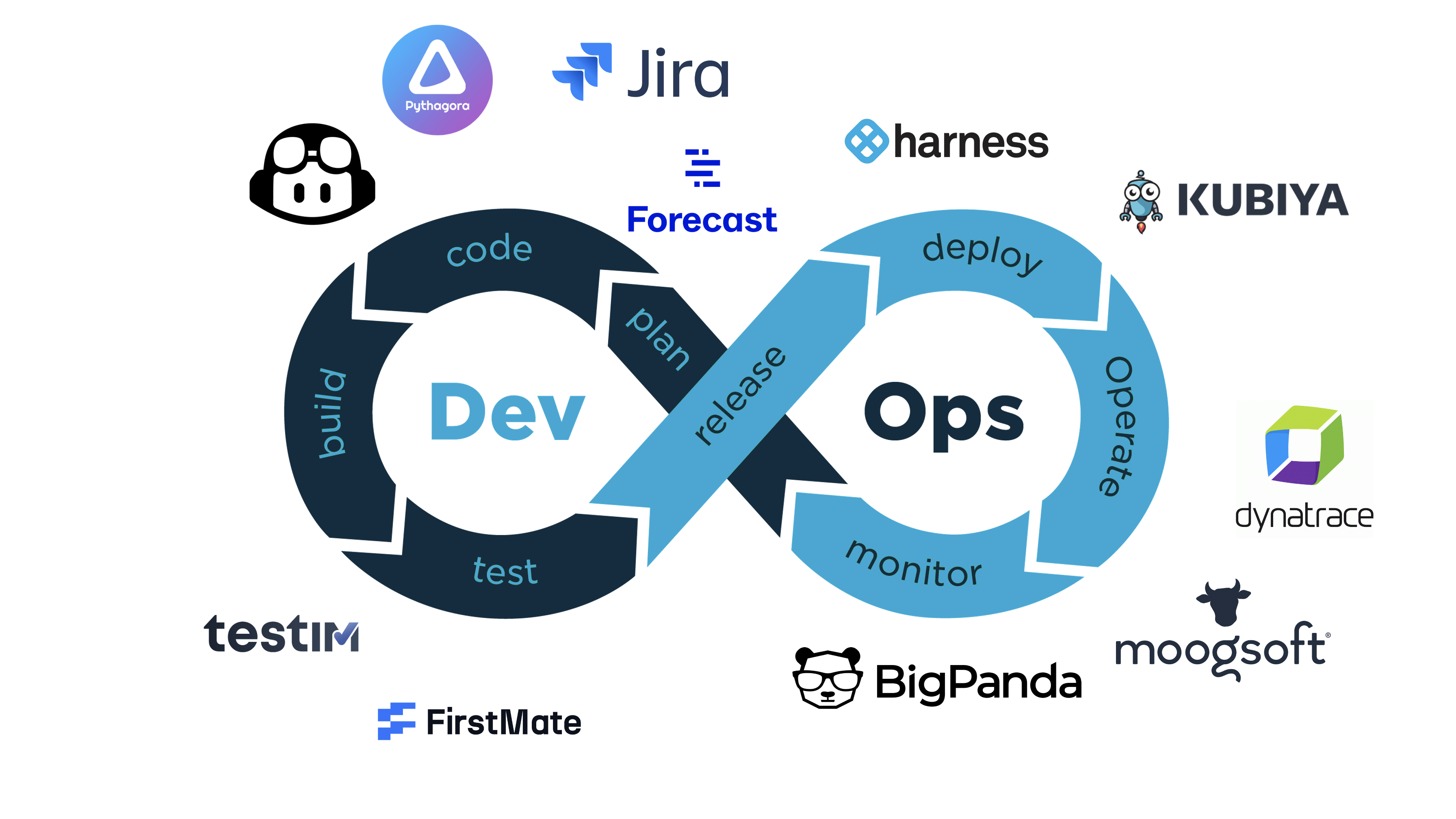

DevOps is a key success factor for modern software development, and most of us have come across AI in one way or another. The intersection of AI and DevOps is reshaping how software development and operations teams work. In this first part of our blog series on AI for DevOps Engineers, we’ll explore the challenges DevOps faces today, how AI can help address them, and the building blocks of AI applications.

First of all, what's the problem we're trying to solve with AI?

DevOps is a set of practices that combines software development (Dev) and IT operations (Ops). Its goal is to shorten the systems development life cycle and provide continuous delivery with high software quality. However, not every team moves at the same pace or has the same level of DevOps maturity, so many challenges remain:

Manual, Error-Prone Processes: Many DevOps workflows still rely on manual interventions, which are time-consuming and prone to human error. This slows down operations and leads to inefficient use of infrastructure.

Delayed Issue Detection and Response: Without real-time data processing, teams struggle to detect and respond to issues quickly. This results in prolonged downtimes and inefficient incident management.

Skill Gaps Between Teams: Development and operations teams often have different technical expertise, making collaboration difficult and reducing overall productivity.

Scalability Issues: Scaling operations in dynamic environments is a constant struggle, especially without automation and AI-driven processes.

Security Vulnerabilities: The lack of real-time threat detection and automated defenses increases the risk of cyberattacks and data breaches.

Inability to Adapt to Rapid Changes: In today’s fast-evolving digital landscape, businesses that fail to adopt advanced AI-driven solutions risk falling behind competitors.

AI offers solutions to these challenges by augmenting human capabilities and automating repetitive tasks. Here’s how AI can transform DevOps:

In the end, AI is here to help us achieve a mature DevOps practice and simplify our workflows. AI comes in many forms and flavors, whether we're using existing tools or creating our own AI applications and workflows.

Fortunately, there is a tool for every phase of the DevOps lifecycle. Here are some popular AI tools that can help us streamline our DevOps workflows:

Atlassian Intelligence is a powerful tool for project management and planning. It uses natural language processing to simplify task management and improve collaboration.

Features:

GitHub Copilot (or alternatives) acts as an AI-powered coding assistant, helping developers write and review code faster.

Features:

FirstMate is an AI tool designed to enhance code quality and streamline testing processes.

Features:

Harness is an AI-driven platform for automating CI/CD pipelines and managing deployments.

Features:

Dynatrace Davis AI focuses on monitoring and operational efficiency, leveraging predictive and generative AI capabilities.

Features:

While there are many AI tools available to enhance DevOps workflows, building our own AI applications can give us more control, flexibility, and the ability to tailor solutions to our specific needs. Let’s explore the foundational building blocks for creating AI applications, starting with Generative AI and Large Language Models (LLMs).

AI has come a long way, and one subset of AI has gained traction like no other in the last few years:

Generative AI (GenAI) is a subset of AI focused on creating new content, such as text, images, and audio. For text, it’s powered by Large Language Models (LLMs), which are trained on massive datasets to understand and generate human-like language.

Generative AI is not just about automation; it’s about enabling creativity and innovation at scale and solving tasks which would typically require human understanding and thinking.

LLMs are the backbone of Generative AI. These models, such as GPT-4, are trained on massive amounts of text data to understand and generate human-like language. Here’s what makes them special:

When we provide a query (prompt) to an LLM, it processes the input token by token and generates a response based on probabilities derived from its training data. While LLMs don’t “think” like humans, their ability to predict the most likely sequence of tokens makes them incredibly powerful for natural language tasks.
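The token-by-token process described above can be illustrated with a toy example. This is a minimal sketch, not how a real LLM is implemented: the `NEXT_TOKEN_PROBS` table and both function names are hypothetical, and a real model computes its probabilities with a neural network conditioned on the entire context rather than only the previous token.

```python
import random

# Hypothetical toy "model": maps the previous token to a probability
# distribution over possible next tokens (illustrative values only).
NEXT_TOKEN_PROBS = {
    "<start>": {"deploy": 0.6, "rollback": 0.4},
    "deploy": {"the": 1.0},
    "rollback": {"the": 1.0},
    "the": {"service": 0.7, "pipeline": 0.3},
    "service": {"<end>": 1.0},
    "pipeline": {"<end>": 1.0},
}


def generate(rng, max_tokens=10):
    """Sample one token at a time according to the probabilities."""
    tokens = []
    current = "<start>"
    for _ in range(max_tokens):
        dist = NEXT_TOKEN_PROBS[current]
        candidates = list(dist.keys())
        weights = list(dist.values())
        # Draw the next token in proportion to its probability.
        current = rng.choices(candidates, weights=weights, k=1)[0]
        if current == "<end>":
            break
        tokens.append(current)
    return tokens


def greedy_generate(max_tokens=10):
    """Always pick the single most likely next token."""
    tokens = []
    current = "<start>"
    for _ in range(max_tokens):
        dist = NEXT_TOKEN_PROBS[current]
        current = max(dist, key=dist.get)
        if current == "<end>":
            break
        tokens.append(current)
    return tokens


if __name__ == "__main__":
    print(" ".join(greedy_generate()))
    print(" ".join(generate(random.Random())))
```

Greedy selection always yields the same sentence, while sampling can produce different outputs from the same starting point, which is one reason the same prompt can lead to different responses.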

If you want to run and experiment with LLMs, options include cloud-based solutions like OpenAI Playground or local tools such as Ollama. OpenAI Playground provides scalability and ease of use, while Ollama runs models locally, offering greater privacy and offline capabilities. If you're interested in running your own LLMs, check out our blog post on Empowering Local AI.
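For the local option, a prompt can be sent to Ollama over its REST API. The following is a minimal sketch under a few assumptions: an Ollama server is running on its default port (11434), and a model named `llama3` has already been pulled. The model name and the helper functions `build_generate_request` and `ask` are illustrative choices, not part of the original setup.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt, model="llama3"):
    """Build a POST request for Ollama's generate endpoint.

    The model name is an assumption; use any model pulled locally.
    """
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model="llama3"):
    """Send the prompt and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_generate_request(prompt, model)) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]


if __name__ == "__main__":
    print(ask("Explain blue-green deployments in one sentence."))
```

Setting `stream` to `False` requests a single JSON response instead of a stream of partial results, which keeps the example short.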

One limitation of LLMs is their reliance on training data, which may not include the latest or domain-specific information. This is where Retrieval-Augmented Generation (RAG) comes in.

RAG combines the content generation capabilities of LLMs with external knowledge retrieval. It works as follows:

Imagine a chatbot for a product support team. When a user asks a question, the chatbot retrieves information from the product documentation and combines it with its language generation capabilities to provide a precise and helpful response.
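The support chatbot scenario can be sketched in a few lines. This is a simplified illustration under stated assumptions: the documentation snippets in `DOCS` are made up, and retrieval uses plain word overlap as a stand-in for the embedding-based vector search that production RAG systems typically use. The resulting prompt would then be passed to an LLM of your choice.

```python
import re

# Hypothetical product documentation snippets (illustrative only).
DOCS = [
    "To reset your password, open Settings and choose Reset Password.",
    "Deployments can be rolled back from the Releases page.",
    "Invoices are emailed on the first day of each month.",
]


def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, docs, top_k=1):
    """Rank documents by word overlap with the question.

    A stand-in for vector similarity search, used here for simplicity.
    """
    question_words = tokenize(question)
    ranked = sorted(
        docs,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, context_docs):
    """Combine the retrieved context and the question into one prompt."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\n"
        "Answer:"
    )


if __name__ == "__main__":
    question = "How do I reset my password?"
    print(build_prompt(question, retrieve(question, DOCS)))
```

Because the model only sees the retrieved snippets at request time, the documentation can be updated without retraining the model.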

AI is changing DevOps by addressing its most pressing challenges and enabling teams to work smarter, not harder. Generative AI and LLMs are at the forefront of this transformation, offering new ways to create, collaborate, and innovate workflows. With techniques like Retrieval-Augmented Generation, we can push the boundaries of what's possible, combining the best of AI's generative and retrieval capabilities.

In the next part of this series, we'll dive deeper into building our own AI applications with frameworks like LangChain and explore how to ensure quality and security in LLM-powered systems. Stay tuned!

If you're hungry for more details, make sure to check out the video recordings of our latest AI for DevOps Engineers Workshop on YouTube:

Are you interested in our courses, or do you simply have a question that needs answering? You can contact us anytime! We will do our best to answer all your questions.
