In the ever-evolving field of software development, AI coding assistants have emerged as powerful tools to enhance productivity and code quality. Integrated into popular IDEs like Visual Studio Code, these intelligent assistants provide real-time code suggestions, debugging help, optimization tips or even live-chat conversations.

Leading the pack is GitHub Copilot, renowned for its contextual understanding and relevant code completions. However, there are several other noteworthy alternatives, including specialized tools like Codeium, Cody, Blackbox and Tabnine as well as self-hosted language models like Llama Coder with Ollama.

In this blog post, we will explore AI coding assistants, focusing on their integration with Visual Studio Code. We will introduce each tool, highlight its key features and evaluate its performance through a demo application. By the end, you'll have a clear understanding of how these AI assistants can transform your coding workflow, which should help you choose the best one for your needs.

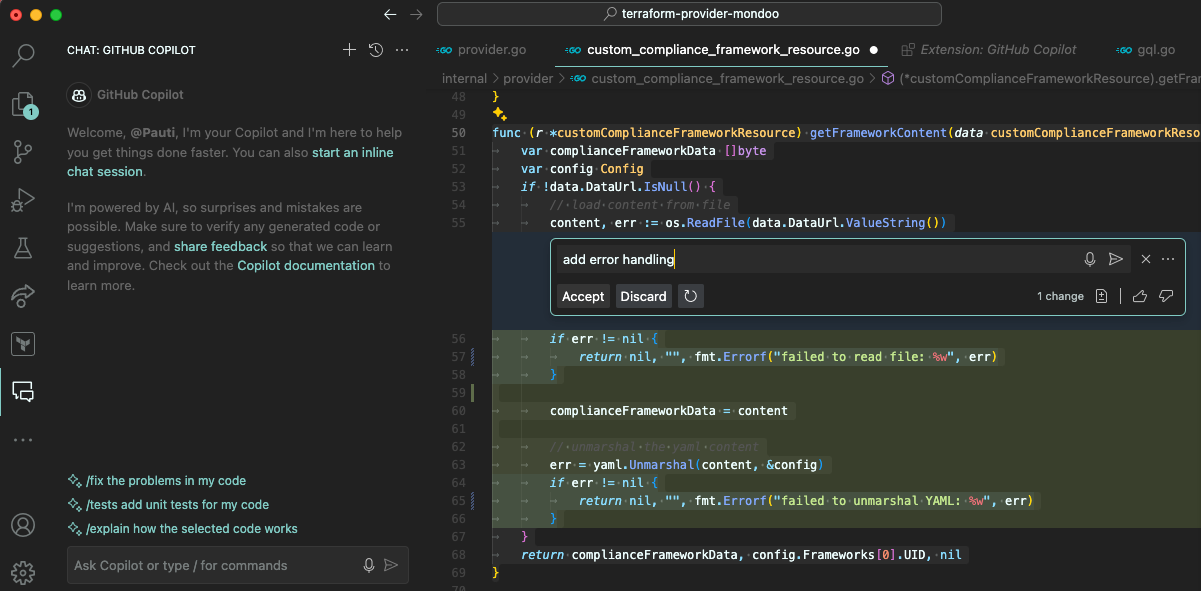

GitHub Copilot, developed by GitHub in collaboration with OpenAI, has quickly become one of the most prominent AI coding assistants available. Seamlessly integrated with Visual Studio Code, Copilot leverages the power of OpenAI's Codex model to provide intelligent code suggestions, complete code snippets, and even generate entire functions based on natural language descriptions.

Visual Studio Code Extension for GitHub Copilot

What’s possible with GitHub Copilot:

GitHub Copilot offers three plans: Individual ($10/month) for solo developers, students, and educators, featuring unlimited chat and real-time code suggestions; Business ($19/user/month) for organisations, adding user management, data exclusion from training, IP indemnity, and SAML SSO; and Enterprise ($39/user/month) for companies needing advanced customization, including fine-tuned models, pull request analysis, and enhanced management policies.

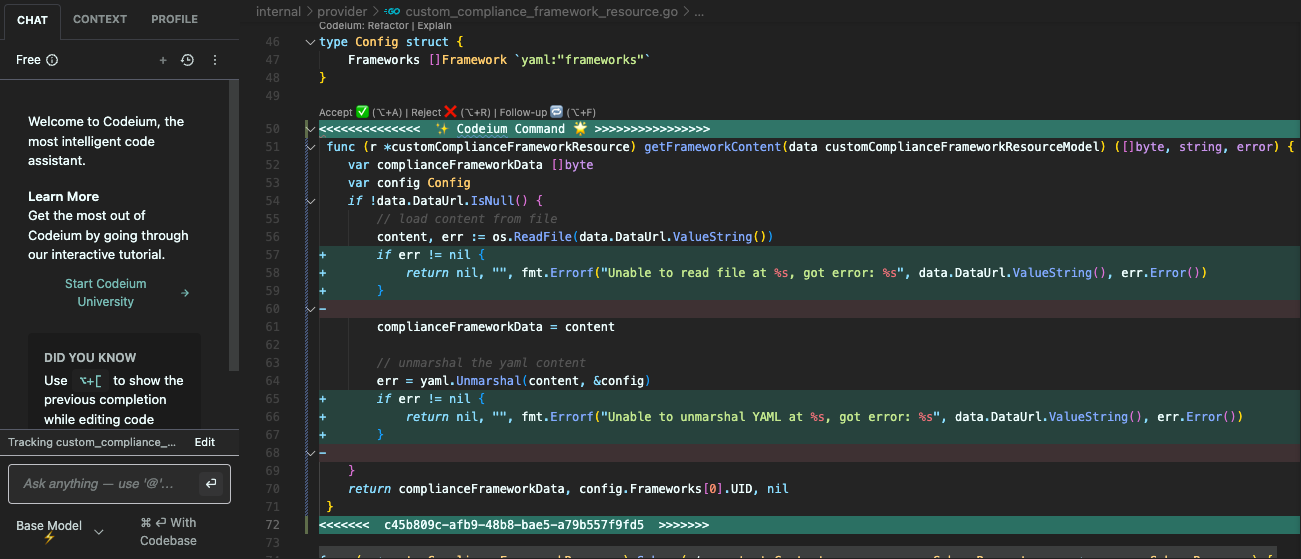

Just like GitHub Copilot, Codeium promises to be an advanced coding assistant with autocompletion, context awareness and chat - all for free. Codeium uses in-house trained models and offers free access to advanced code completion, search capabilities, and an interactive chat feature, making it an attractive alternative to Copilot. This service supports an extensive range of programming languages and seamlessly integrates with numerous IDEs.

Visual Studio Code Extension for Codeium

What’s possible with Codeium:

Individual users can enjoy Codeium's features for free, including code autocomplete, an in-editor AI chat assistant, unlimited usage with encryption in transit and assistance via Discord. For teams of up to 200 seats, Codeium offers a plan at $12 per seat per month, providing additional benefits like admin usage dashboard, seat management, advanced personalization, GPT-4 support, and organisation-wide zero-day retention, with upcoming features including document searching.

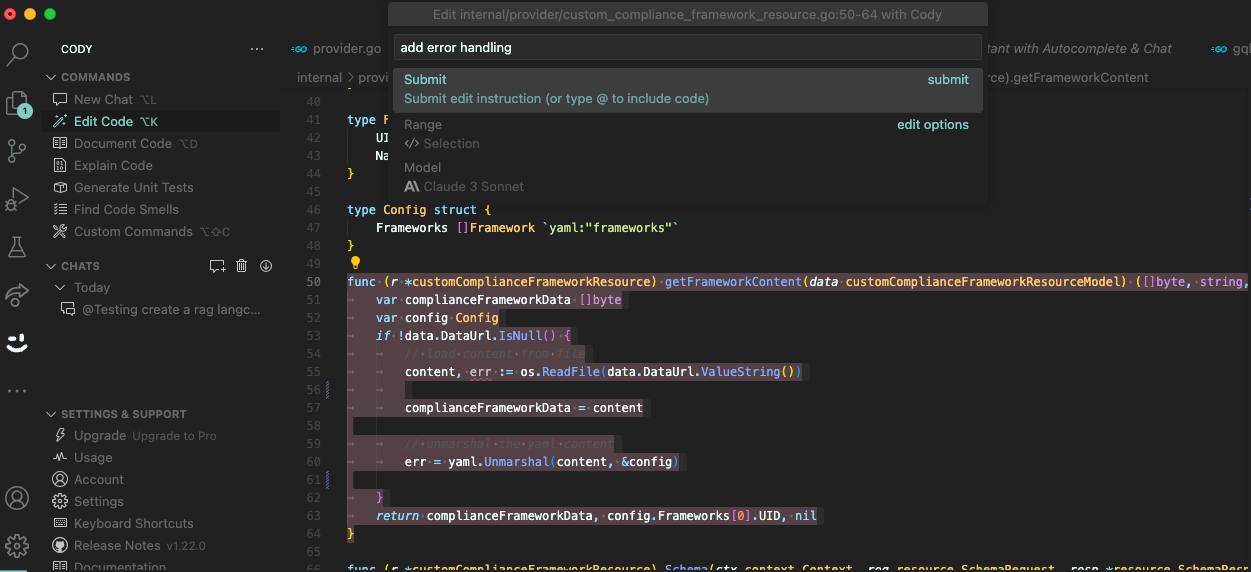

Sourcegraph Cody is an open-source AI coding assistant that provides AI-generated autocomplete, answers coding questions, and writes and fixes code. It uses different models and pulls context from both local and remote repositories, and features custom commands and swappable LLMs.

Visual Studio Code Extension for Cody

What’s possible with Cody:

The Free plan is designed for light usage, offering 500 autocompletions and 20 messages and commands per month. It provides basic code context and personalization for small local codebases, with default LLM support across chat, commands, and autocomplete. The Pro plan, priced at $9 per user per month, is aimed at professional developers and small teams: it offers unlimited autocompletions and messages, personalization tailored for larger local codebases, multiple LLM choices for chat and default LLMs for autocomplete. The Enterprise plan, at $19 per user per month, provides advanced personalization for enterprise codebases, flexible LLM choices, and additional enterprise features like flexible deployment options and admin security features.

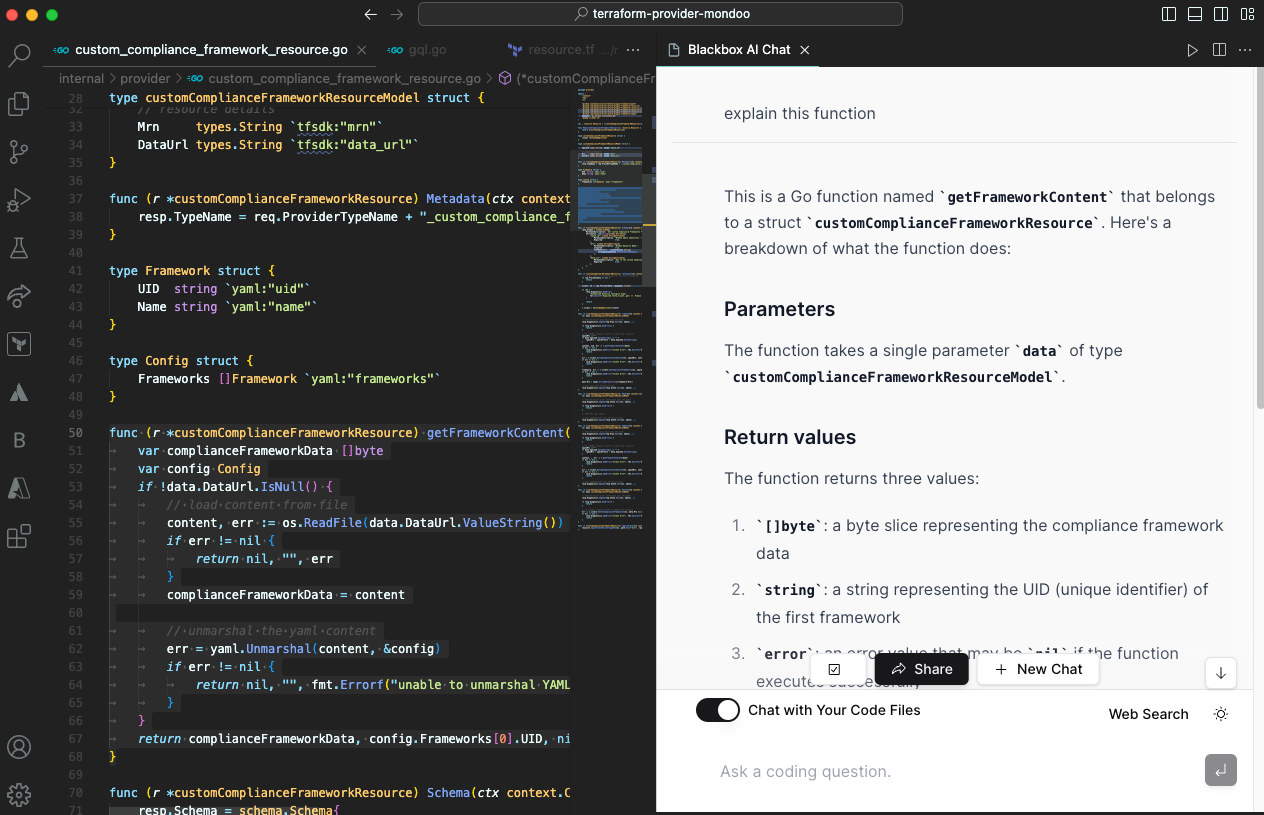

Blackbox AI is a comprehensive AI coding assistant with code suggestion, generation and code chat. The Visual Studio Code Extension further offers AI-generated commit messages and tracks local project changes. It focuses on code generation from natural language descriptions and includes a search function.

Visual Studio Code Extension for Blackbox

What’s possible with Blackbox:

Blackbox AI offers an onboarding call feature, potentially providing personalized support and guidance to users, as well as a monthly $9 Pro+ subscription for unlimited autocompletion and code chat.

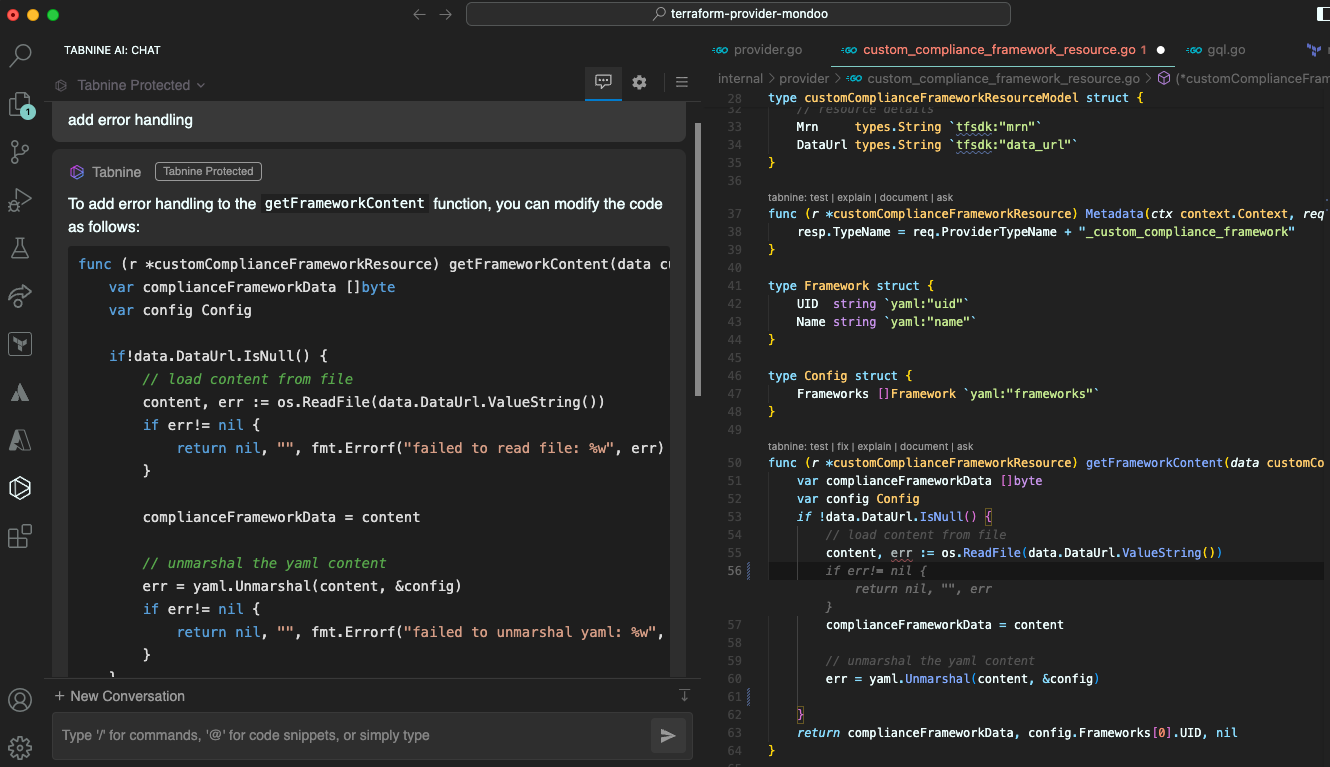

Tabnine stands out as an AI coding assistant that was trained on high-quality public code repositories and offers both cloud-based and on-premises solutions. It uses proprietary LLMs that can be tailored to an organisation. It supports various IDEs as well as a wide range of languages and frameworks.

Visual Studio Code Extension for Tabnine

What’s possible with Tabnine:

Tabnine offers three pricing plans to suit different user needs. The Basic plan is free and includes an on-device AI code completion model suitable for simple projects. The Pro plan, at $12 per user per month with a 90-day free trial, offers advanced features such as best-in-class AI models, full-function code completions, natural language code generation, and SaaS deployment. The Enterprise plan, priced at $39 per user per month with a 1-year commitment, includes all Pro features plus enhanced personalization, private code repository models, flexible deployment options, priority support, and comprehensive team management and reporting tools.

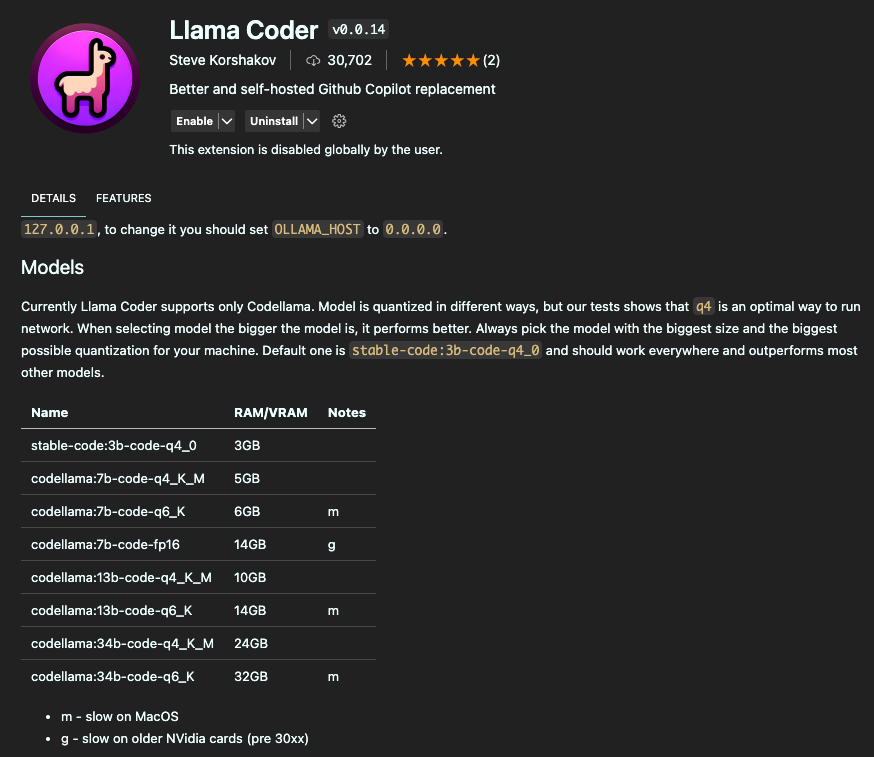

Llama Coder offers a robust, privacy-focused alternative to traditional cloud-based AI coding assistants like GitHub Copilot. Designed to run on-premises, this tool provides AI capabilities directly on your machine. Your code never leaves your hardware, which improves data privacy, and latency can be low if the hardware is powerful enough.

Ollama is a platform that allows developers to run and experiment with advanced AI models, such as Codellama and Stable Code, locally on their hardware. This approach leverages the power of local computation to provide fast and secure AI-assisted coding.
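To make "running a model locally" more concrete, here is a minimal sketch of how a script could ask a local Ollama server for a completion over its REST API. It assumes Ollama is running on its default port (11434) and that a model named `codellama` has already been pulled; the helper names `build_generate_request` and `complete` are our own and not part of any library.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "codellama") -> urllib.request.Request:
    """Build a non-streaming completion request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(prompt: str, model: str = "codellama") -> str:
    """Send the prompt to Ollama and return the generated text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=120) as response:
        # With "stream": False the server answers with a single JSON object.
        return json.loads(response.read())["response"]
```

Calling `complete("# Python function that reverses a string\ndef ")` would then return the model's continuation, provided the server is up. Nothing in this exchange leaves your machine, which is the point of the self-hosted approach.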

What’s possible with Ollama:

Llama Coder is an extension for Visual Studio Code that integrates with Ollama to offer self-hosted AI coding assistance. It provides code completions and suggestions without sending your code to a third-party service.

Visual Studio Code Extension for Llama

What’s possible with Llama Coder:

Llama Coder and Ollama are entirely free to use. The only limitation is the hardware requirement: Llama Coder performs best on Apple Silicon Macs (M1/M2/M3) or systems equipped with an RTX 4090 GPU, and a minimum of 16GB of RAM is recommended. This makes Llama Coder a cost-effective solution for developers who already own suitable hardware or are willing to invest in it.
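For a rough feeling of what the paid tiers mean for a team budget, the following sketch compares yearly costs using the per-seat prices quoted in this post. The selection of plans and the plan labels in the dictionary are our own choice for illustration, prices may have changed since writing, and hardware costs for the self-hosted option are not included.

```python
# Monthly per-seat prices in USD, as quoted in this post (they may have changed since).
TEAM_PLANS = {
    "GitHub Copilot Business": 19,
    "Codeium (team plan)": 12,
    "Cody Pro": 9,
    "Tabnine Pro": 12,
    "Llama Coder + Ollama": 0,  # free software; hardware cost not included
}


def annual_cost(price_per_seat: int, seats: int) -> int:
    """Yearly cost in USD for a given monthly per-seat price."""
    return price_per_seat * seats * 12


def compare(seats: int) -> list[tuple[str, int]]:
    """Return (plan, yearly cost) pairs, cheapest first."""
    costs = [(name, annual_cost(price, seats)) for name, price in TEAM_PLANS.items()]
    return sorted(costs, key=lambda item: item[1])


for plan, cost in compare(seats=10):
    print(f"{plan}: ${cost} per year")
```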

In this test scenario, we evaluate how well the coding assistants support us in developing a LangChain Retrieval-Augmented Generation (RAG) application in Python that uses LLMs served by Ollama. Our focus is on how these assistants aid in coding tasks, particularly integrating the LLM into the LangChain framework.
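For readers who have not built a RAG application before, the pattern can be sketched in a few lines: retrieve the documents most relevant to a question, put them into the prompt, and let the model answer. The sketch below is not the demo application and deliberately does not use LangChain or Ollama. It uses only the standard library, a simple word-overlap similarity instead of embeddings, and a hypothetical `generate` callable that stands in for the LLM.

```python
import math
import re
from collections import Counter
from typing import Callable


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words representation of a text."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Retrieval step: return the k documents most similar to the question."""
    query = tokenize(question)
    ranked = sorted(documents, key=lambda doc: cosine(query, tokenize(doc)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Augmentation step: put the retrieved documents into the prompt."""
    joined = "\n".join(f"- {chunk}" for chunk in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}\nAnswer:"


def answer(question: str, documents: list[str], generate: Callable[[str], str], k: int = 2) -> str:
    """Generation step: `generate` is a stand-in for a call to an LLM."""
    return generate(build_prompt(question, retrieve(question, documents, k)))
```

In a real application, a vector store with embeddings would replace `retrieve`, and `generate` would call a model served by Ollama. The framework-specific wiring of these pieces is where the assistants had to prove themselves.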

GitHub Copilot started strong by auto-completing the entire function right after I wrote the framework and function definition. Most suggestions appeared quickly and were concise. The inline prompt chat can even explain, fix, or document code chunks. However, due to a lack of knowledge about the specific frameworks I was using, the assistant often neglected important details such as required parameters and framework-specific configurations. This sometimes led to incomplete or incorrect implementations, requiring manual adjustments to get the code working correctly.

Codeium also reacted quickly to my typing, providing accurate suggestions. Because Codeium can access my local repository, it already knew important aspects of the LangChain framework and generated a running RAG application in no time. This ability to leverage context from existing files in the repository significantly improved the relevance and accuracy of the suggestions. However, even without similar existing files, Codeium quickly found a solution, though it encountered some hiccups. There were occasional issues with less common use cases and specific configurations, but overall, Codeium proved to be a reliable assistant, capable of quickly generating functional code with minimal intervention.

With Cody, chat responses were quick and efficient. After I provided a rough description of the application through the chat interface, the assistant swiftly implemented the necessary code and even added a small input feature, enabling dynamic questions. Thanks to Cody's ability to scan my codebase, it found a solution right away. Without this additional context, however, Cody responded more slowly and struggled to find a good solution, even after I gave it various hints.

Blackbox took a different approach compared to other assistants. Initially, it attempted to create an entirely different application and struggled to complete its code generation. Its process was notably slower and had less user interaction compared to other assistants, particularly with code completion, and it frequently failed to finish generating code.

Tabnine proved to be highly useful in crafting the application, although it primarily completed smaller sections of code due to the limitations of the free plan. Notably, Tabnine stands out as the only assistant to offer a more sophisticated user interface, allowing users to switch between models and adjust further settings. Despite being on the basic version, I had the flexibility to adjust deployment modes. However, there were instances where the tool failed to respond, particularly when used in local mode.

The Llama Coder Extension was straightforward to set up. Within a menu, I could select a specific model, though it was necessary to ensure that the model was installed locally with Ollama. The assistant itself needed some time to generate suggestions and sometimes produced meaningful and concise output, while at other times, it failed to grasp the context of the surrounding code altogether.
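Whether a model is installed can also be checked programmatically. The sketch below assumes a local Ollama server on its default port and uses its `/api/tags` endpoint, which lists the locally available models. The helper names are our own, and the matching rule (a name without a tag matches any installed tag) is a simplification we chose for illustration.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
TAGS_URL = "http://localhost:11434/api/tags"


def installed_models(tags_payload: dict) -> set[str]:
    """Extract model names (e.g. 'codellama:7b') from an /api/tags response."""
    return {entry["name"] for entry in tags_payload.get("models", [])}


def is_installed(model: str, tags_payload: dict) -> bool:
    """Check a model name; without an explicit tag, any installed tag matches."""
    names = installed_models(tags_payload)
    if ":" in model:
        return model in names
    return any(name.split(":", 1)[0] == model for name in names)


def fetch_tags(url: str = TAGS_URL) -> dict:
    """Ask the local Ollama server which models are available."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.loads(response.read())
```

With the server running, `is_installed("codellama", fetch_tags())` tells you whether the model still needs to be pulled before you select it in the extension.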

In the end, this comparison cannot measure the true effectiveness of the tools: results always depend on your coding style, on how well a tool performs for a specific language, and on the selected model. However, it gives us an opportunity to share our experiences with these artificial pair programmers and offers insights into their strengths and weaknesses in different scenarios.

To wrap up our exploration of coding assistants, it's important to recognize that there are many other tools out there, each with its own distinct features and concepts. The tools we've discussed offer a glimpse into the diversity of options available to developers, showcasing capabilities such as contextual understanding, custom commands, privacy-focused deployment, and more. The landscape of coding assistance keeps changing, with new tools emerging and existing ones adapting to the demands of developers.
